Online search is shifting from ‘finding websites’ to ‘finding answers.’

Instead of actually visiting websites through search engines, users are increasingly able to find the information they need through generative AI responses on platforms like ChatGPT, Claude, and Perplexity.

Even Google is transitioning to more of an answer engine with the prominence of its AI Overviews and AI Mode.

As a result, most brands need to switch gears in terms of SEO.

Earning AI citations is now the best way to keep your brand at the top of your audience’s minds, which is what GEO is all about.

Generating traffic through the organic search results is becoming less effective each day (the presence of an AIO can cause your click-through rates to drop significantly).

The game is now about learning how AI models choose which content to cite, which is what we’re diving into today.

AIs evaluate trust differently from search engines, although some basics still apply. Keep reading to discover the exact processes AI search systems use to rank content.

How Does the AI Retrieval Process Work?

First, it’s important to understand how AI systems index and retrieve online content.

Unlike classic search engines, LLMs do not practice page-wide indexing.

Instead of crawling every word on the page, AI models ingest content in small, self-contained sections called chunks.

Each chunk has a fixed limit of roughly 300 – 500 tokens.

Moreover, whenever an AI wants to retrieve online sources to help generate answers, they don’t retrieve entire pages. Rather, they retrieve specific chunks that directly relate to the user query.

For instance, imagine that a user asks ChatGPT about the pros and cons of a Keto diet.

To synthesize an answer, ChatGPT doesn’t waste its time pulling entire articles explaining Keto diets. Instead, thanks to chunking, it will retrieve short snippets directly discussing Keto pros and cons.

An example would be pulling an H3 section from an educational article about Keto diets that’s titled “What are the pros and cons of going on a Keto diet?” The H3 header and 300 – 500 tokens worth of the text that follows is all the model will retrieve, not the entire page.

It won’t just select one chunk, either. The AI model will retrieve multiple relevant snippets from several trusted sources.

This highlights another clear difference from traditional SEO. Your web pages aren’t competing against other web pages; your snippets are competing against other snippets, and they’re entirely self-contained.

That means if your content isn’t structured into clear, standalone sections that don’t venture off topic, your content may seem irrelevant to AI tools, even if the piece as a whole is fantastic and authoritative.

This is just one way that LLMs act differently from traditional search algorithms.

To get the full picture, let’s take a closer look at how AI systems retrieve and rank content.

The 4-Stage AI Retrieval Process: Prepare, Retrieve, Signal, and Serve

ChatGPT, Perplexity, Google’s AI Overviews, and other generative AI models all follow the same four-stage retrieval process when answering user queries:

Prepare the query → Retrieve content → Signal evaluation (ranking) → Serve the final answer with citations

Specific processes take place at each stage, such as retrieving online content and evaluating trust signals.

Stage #1: Preparing the query

Before an LLM can retrieve relevant online content, it first has to clean, standardize, and interpret the raw query.

This stage has two main purposes:

- Determine the intent behind the query (includes language/locale and whether the result requires an AI answer, classic SERP, or both).

- Normalize the query so that it can cleanly map with lexical indexes and vector embeddings.

It’s where the AI works to find out, “What’s being asked of me here?”

To do so, queries must be normalized, which involves:

- Lowercasing

- Removing or harmonizing punctuation

- Stemming (reducing words to their ‘root’ or ‘stem’ forms, like changing ‘running’ or ‘ran’ to ‘run’)

- Uni-coding everything (getting rid of glyphs and diacritics)

This step makes sure all the ideas in the query are expressed in one consistent form, so they can properly be compared to the embeddings inside a vector database.

Here’s an example:

Raw query: What’s a hi yield savings acct?

Normalized version: ‘high yield savings account’

Here, the AI system normalized the spelling and got rid of a question mark. The normalized version is now easily comparable to related embeddings, such as ‘finances’ and ‘bank accounts.’

If the system used the raw query, it would have trouble connecting terms like ‘hi’ and ‘acct’ to related concepts, since they don’t align with the standardized vocabulary in the index.

Stage #2: Retrieving relevant online content

Here’s where token chunking enters the picture.

Rather than parsing entire pages, AI systems retrieve and compare relevant snippets consisting of 300 – 500 tokens (or more).

Sticking with the high-yield savings account example, let’s say a user asks ChatGPT how they work.

The system would pull sections of articles discussing how high-yield savings accounts work. At this point in the pipeline, it retrieves multiple sources.

The signal stage is when the model determines which sources are the most trustworthy.

Stage #3: Evaluating trust signals

Next, AI systems use a series of seven trust signals to ensure they only cite the highest quality sources.

They are:

- Base ranking (core algorithm output)

- Gecko score (embedding similarity)

- Jetstream (cross-attention to gain more context)

- BM25 lexical keyword matching (to keep the results relevant)

- PCTR (to measure engagement)

- Freshness (AIs prefer the most up-to-date sources)

- Boost/bury (An additional layer of business policies and safety overrides)

Whether you’re optimizing for Google’s AI Overviews, ChatGPT, or Bing’s Copilot, they all use the same layers of trust signals.

Each signal has its unique place in the stack, and the order occurs for a reason.

The base ranking layer acts like a traditional search algorithm with some enhancements (like advanced semantic understanding).

Gecko and Jetstream are where the modern AI-powered signals first appear. They use vector embeddings (Gecko) and cross-attention (Jetstream) to find suitable online content.

It may come as a surprise that BM25 keyword matching is still in the mix, but it’s there as a supporting signal to ground the results in truly relevant content.

That’s because vector search and cross-attention can be vague at times and focus on things that are relevant but not quite pertinent to the user query.

By using BM25, AI systems stick to results that contain the exact language of the query, which keeps the results aligned with the user’s intent.

Also, the boost/bury layer is where things like partnerships, brand reputation, and safety policies come into play. They’re a series of signals that boost certain results (well-known brands, partners, businesses with strong reviews, etc.) and bury others (content that violates safety policies, spam, brands with bad reputations, etc.).

Stage #4: Serve the synthesized answer and citations

The final step is to put everything together and serve the user a generated answer and a series of citations.

This is when the LLM synthesizes its own answer using the top-rated chunks as building blocks. It also formats the response using inline citations, chat-style answers, and UI elements like photos and videos.

From a marketing perspective, the goal is to get your content to get pulled during the retrieve stage and then survive the signal stage so that it can appear during the serve stage.

A lot goes into this, so let’s analyze some optimization techniques from our GEO playbook.

How to Optimize for More AI Citations: Becoming a Brand AI Models Reference

At its core, GEO revolves around two things:

- Optimizing for each stage of the AI retrieval pipeline

- Boosting all seven major AI trust signals

That’s why it’s so important to understand how AI models select which content to cite. These facts literally form the foundation of GEO as a marketing practice.

Here are the most important optimization techniques you should start using right now.

Develop content as standalone, answer-ready chunks

Since AI systems ingest content in small chunks, you should structure your articles that way.

While each article should still contain a beginning, middle, and end, each subsection must be able to function by itself.

Here are some pointers:

-

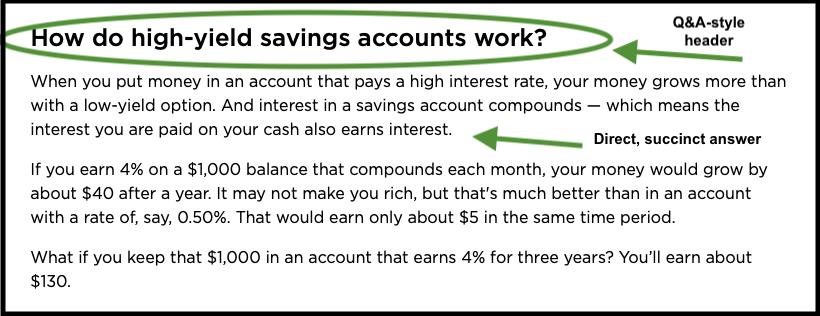

- Lead each key section with a direct answer. Each H2/H3 section needs a focused heading (like How do high-yield savings accounts work?) followed by a 1 – 3 sentence answer, definition, or key takeaway that could easily appear in an AI snippet.

- Use tables and bulleted lists. LLMs can cleanly extract information from bulleted lists and tables. Also, comparison tables are more likely to get cited than walls of text.

- Keep each subsection under 400 words. Exceeding an AI model’s token limit may cause lost context, so keep each section as short and succinct as possible.

- Follow the Q&A format for subheadings. One of the most effective ways to make each chunk self-contained is to present a question in an H2 or H3 that you immediately answer in the first sentence.

If you’re already producing cleanly structured content, you may not need to make any adjustments. Also, the classic ‘what it is, how it works, pros and cons’ outline is still very effective; you just need to make sure each section can exist on its own.

Maximize trust and authority signals

Generative engines favor content that demonstrates visible expertise and real-world perspective. For this reason, Google’s E-E-A-T (experience, expertise, authoritativeness, and trustworthiness) system works perfectly.

By that, we mean your content should include:

- Named authors

- Detailed author bios containing credentials and outbound links (their website, social profiles, etc.)

- First-hand experiences

- Original insights

It’s also crucial to have editorial backlinks from trusted news outlets, media publications, and relevant blogs. These links should convey your brand as an expert source by linking to your content, research, or products.

Brand mentions (even without backlinks) will still impact your brand’s reputation on AI search tools.

Lastly, brand sentiment plays a significant role in how AI systems decide which content to cite. Your brand should have mostly positive user reviews (across multiple platforms) and a generally positive sentiment in relevant forums and online communities.

Ensure pages are fresh and machine-readable

Schema markup isn’t optional anymore; it’s a necessity for improving AI visibility.

Using schemas like FAQPage, HowTo, Article, and Product ensures search engines and AI layers can clearly understand what each section is and when it’s best to cite it.

Semantic HTML also provides a strong structural foundation that makes schema markup more accurate and easier to implement.

Tags like <article> and <head> already convey relationships that schema markup can build upon (instead of starting from scratch with <span> or <div> tags).

Generative AI systems also have a very real recency bias, especially for queries with temporal or time-sensitive intent. Regularly refreshing and updating key pages will increase the chances they get pulled into answers.

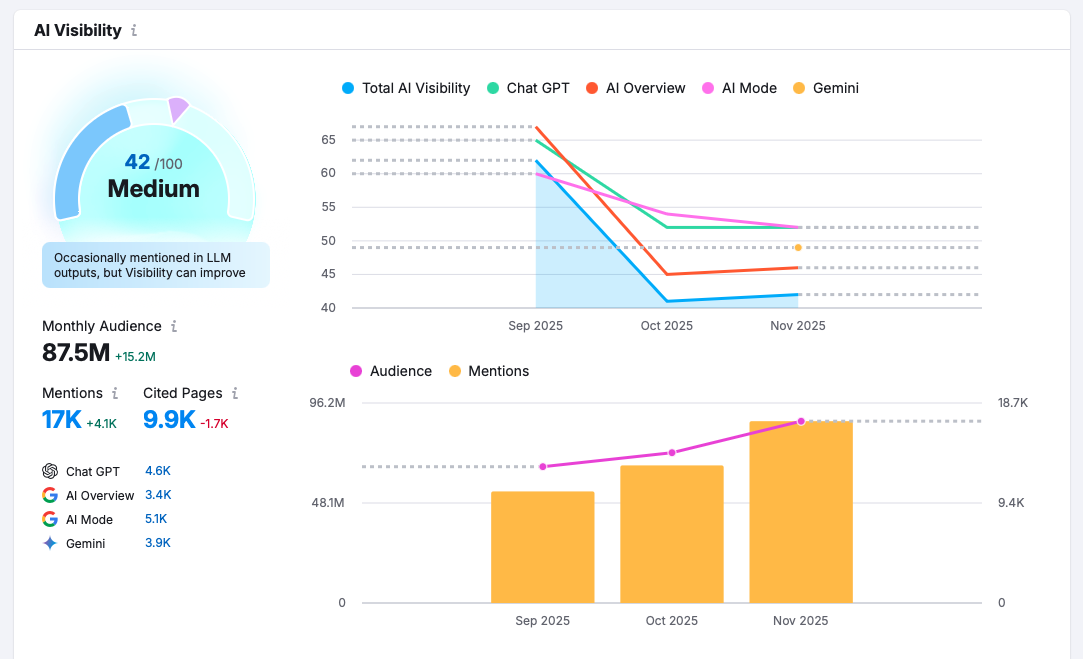

Track your progress and adjust accordingly

No campaign is complete without a way to measure your progress. With GEO, you’ll need to keep track of:

- Your total AI citations – This is the metric that captures your brand’s presence across ChatGPT, AI Overviews, and other generative AI platforms. Popular SEO tools like Ahrefs and Semrush have incorporated AI search metrics, including total AI citations.

- Which type of content earns the most citations – This knowledge informs future content strategies. By isolating the pages that earn the most citations, you’ll know how to replicate their success in the future.

- Your share of voice compared to competitors in your market – How often do you get cited versus other sites in your niche? Tools that provide AI share of voice metrics include Evertune AI and LLM Pulse.

- AI referral traffic and direct traffic – How many visitors come to your site during or after AI search sessions? You can find out by configuring custom reports and regex filters in Google Analytics 4.

Tools like Perplexity and ChatGPT pass referrer headers (like chat.openai.com) whenever users click on links embedded in their UI. The most common referrer headers are chat.openai.com, perplexity.ai, claude.ai, gemini.google.com, and copilot.microsoft.com.

By adding a custom GA4 channel group called ‘AI Traffic’ and creating a regex condition on ‘source,’ you can catch these referrals to measure your AI traffic. Also, pay attention to fluctuations in your direct traffic since some users will copy and paste URLs instead of clicking on a hyperlink provided by an AI tool.

Monitoring your progress will not only help you improve the effectiveness of your strategy, but it’ll also help you catch technical errors and runaway competitors before they become major issues.

Wrapping Up: How AI Models Choose Which Content to Reference

Understanding how generative AI models retrieve and rank content is paramount for learning how to optimize for better AI search visibility.

Token chunking, embedding similarity, freshness, and the importance of structured data are all key concepts to grasp in order to start mastering GEO.

Do you need help navigating the AI-powered search world?

Don’t wait to book a call with our team to discover the best way to ensure your brand’s online success.